High-signal environments for agents

Curated by human experts from diverse, high-value professional domains. Every task is verifiable, expert-verified, and built on real data: the kind of work humans get paid to do.

Our Work

What we've built

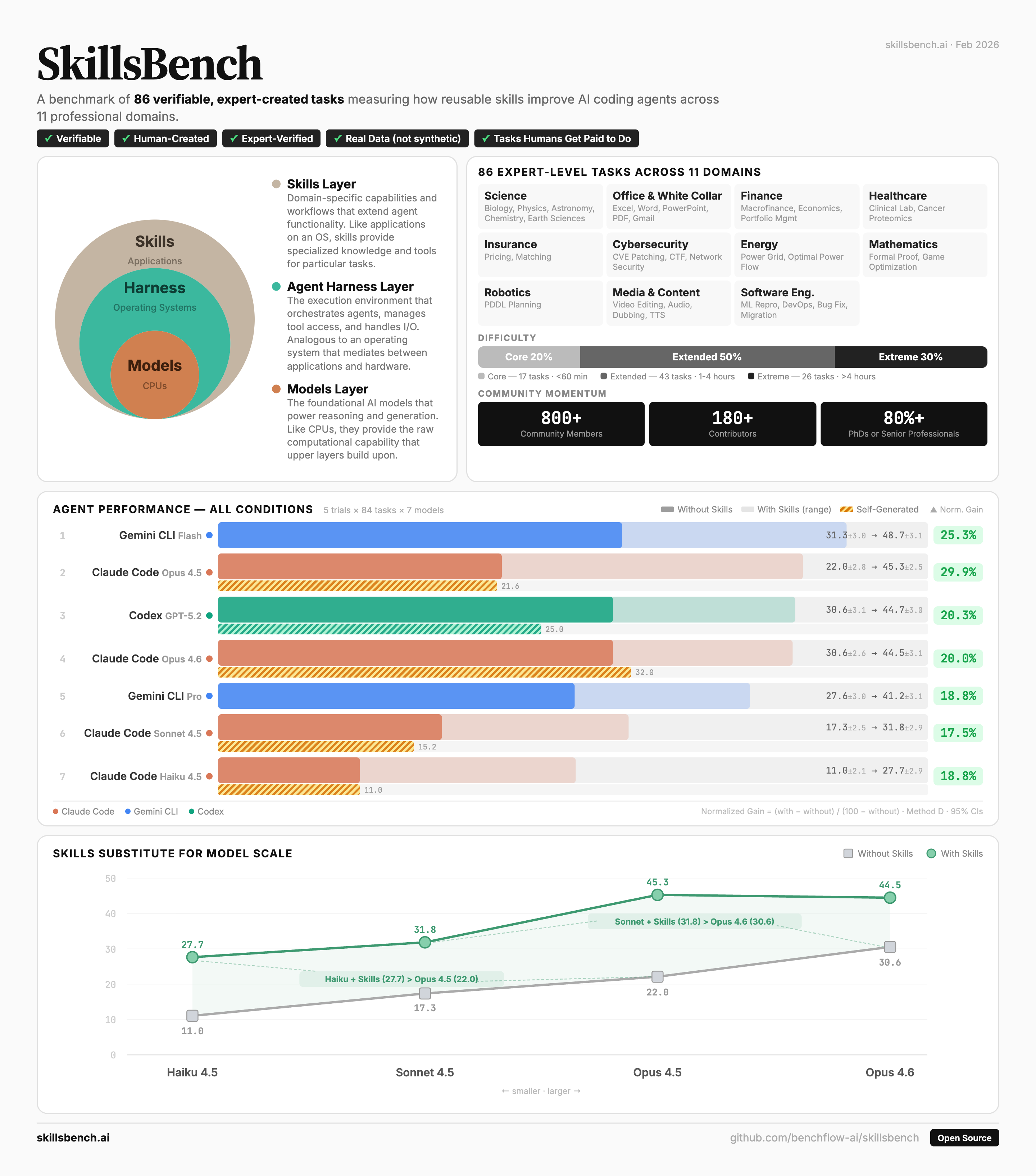

SkillsBench

The first evaluation framework measuring how skills (custom instructions) work for AI agents, and the first dataset measuring how well models use them. 84 expert-curated tasks across diverse, high-GDP-value domains.

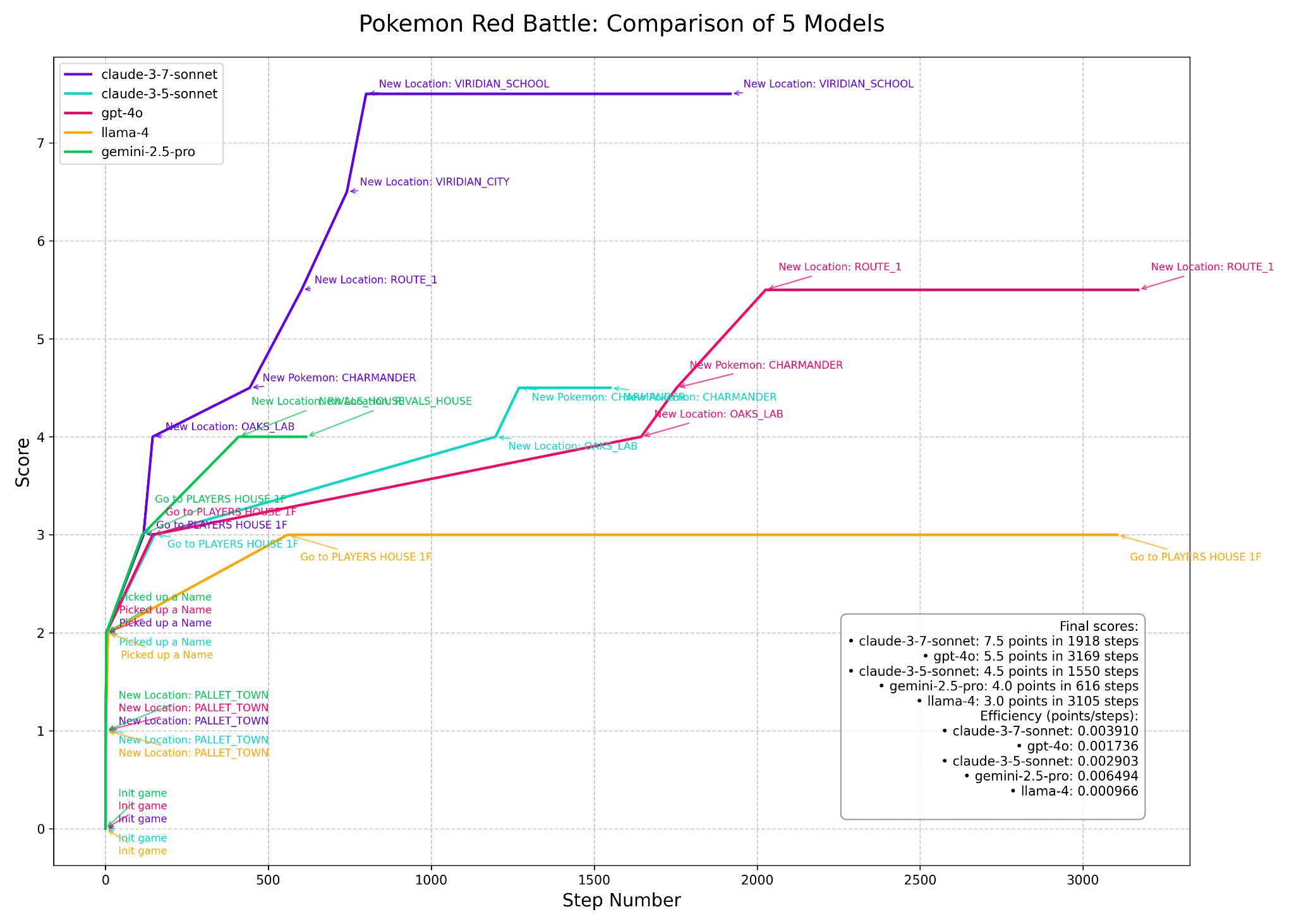

PokemonGym

The first open-source harness that lets any LLM or agent play Pokemon Red and Blue. Tests vision, reasoning, planning, memory, and sequential decision-making. Featured in the Gemini model launch.

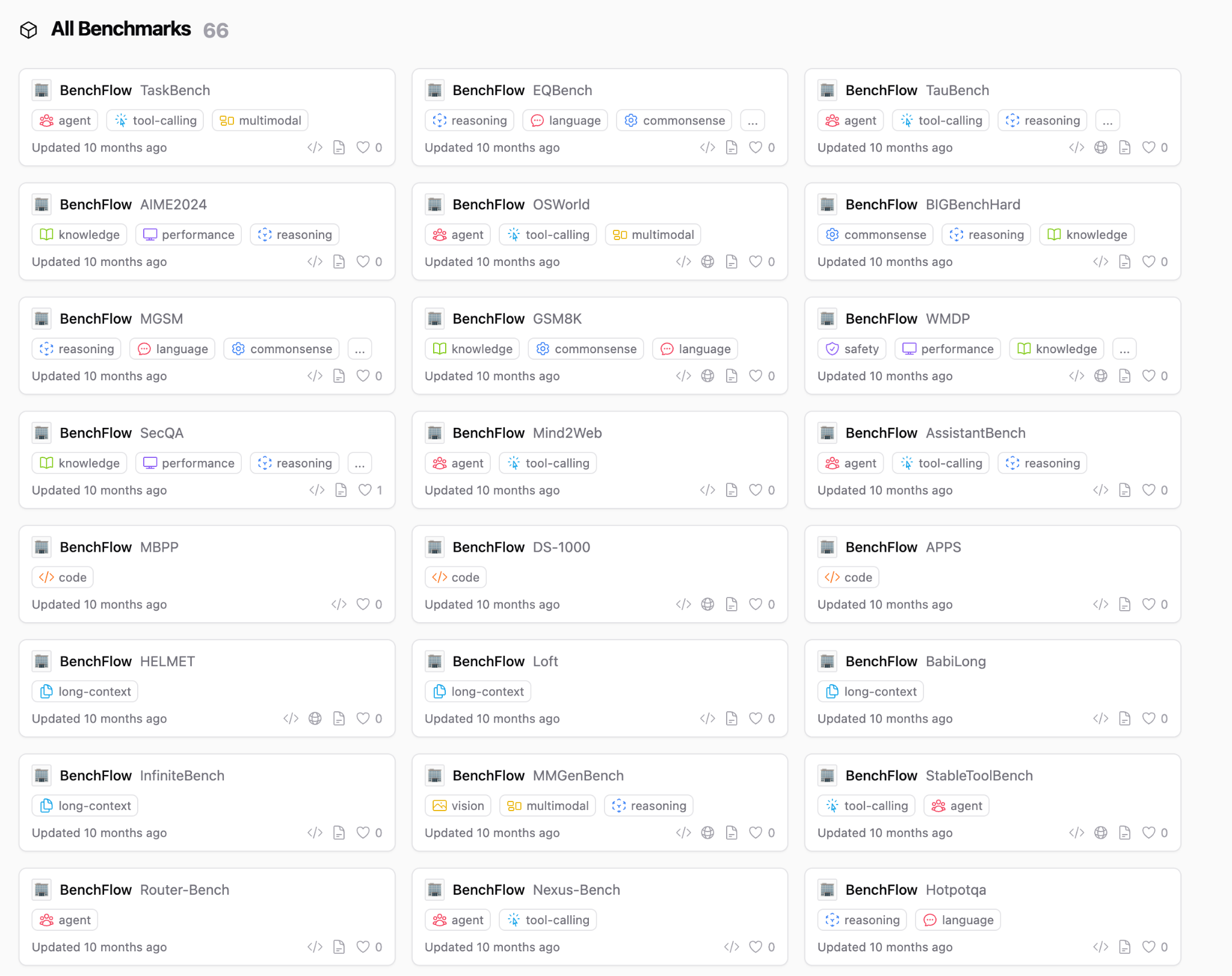

BenchFlow Hub & Runtime

The first protocol for unifying agents and benchmarks. Like Hugging Face, but for benchmarks and RL environments. One-line setup for 60+ benchmarks spanning NLP, web agents, code, medical AI, and more.

Backed by

Jeff Dean

Arash Ferdowsi, Dropbox

Eugene Yan, Amazon

Founders, Inc.

a16z

Scout Fund