BenchFlow Hub & Runtime

Universal Benchmark Protocol

December 2024

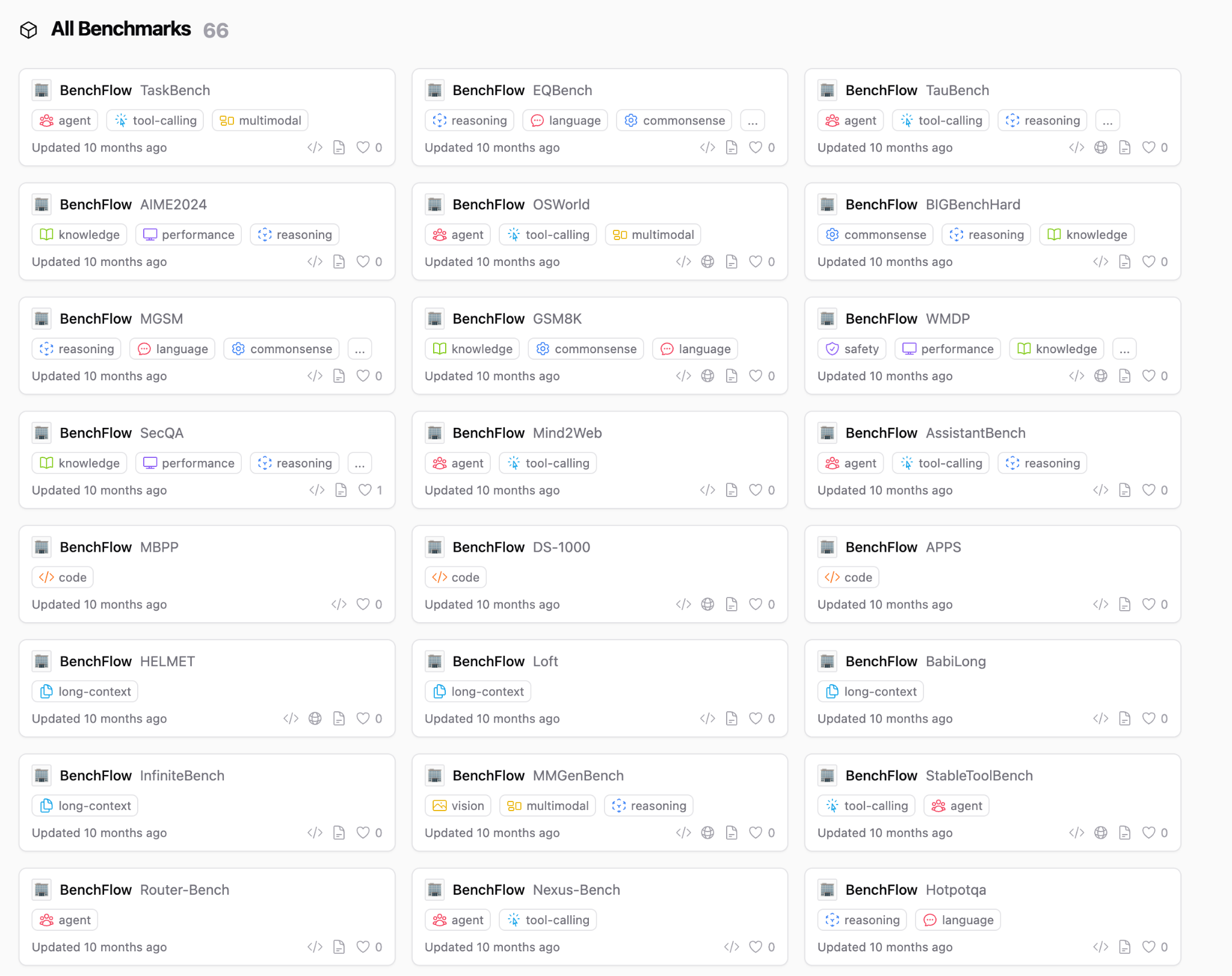

60+ Benchmarks | 179 Stars | 1-line Setup

BenchFlow Hub & Runtime is the first unified protocol for integrating agents with benchmarks: think Hugging Face, but for benchmarks and RL environments. It provides a standardized way to discover, configure, and run benchmarks across the AI evaluation ecosystem.
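To illustrate what a shared agent/benchmark protocol buys, here is a minimal sketch. It is not BenchFlow's API: `Task`, `Agent`, `Benchmark`, `run`, and the toy classes are hypothetical names chosen for illustration. The idea is that any agent exposing `act()` can be evaluated on any benchmark exposing `tasks()` and `score()`.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass(frozen=True)
class Task:
    """One benchmark item (hypothetical schema): an id, a prompt, a reference answer."""
    task_id: str
    prompt: str
    expected: str


class Agent(Protocol):
    """Hypothetical interface: anything with an act() method can be evaluated."""
    def act(self, prompt: str) -> str: ...


class Benchmark(Protocol):
    """Hypothetical interface: anything that yields tasks and scores answers can be run."""
    name: str

    def tasks(self) -> Iterable[Task]: ...

    def score(self, task: Task, answer: str) -> float: ...


def run(benchmark: Benchmark, agent: Agent) -> dict[str, float]:
    """Evaluate any agent on any benchmark that satisfies the two interfaces."""
    return {t.task_id: benchmark.score(t, agent.act(t.prompt)) for t in benchmark.tasks()}


# Toy implementations that exist only to exercise the interface.
class AdditionBenchmark:
    name = "toy-addition"

    def tasks(self) -> Iterable[Task]:
        return [Task("t1", "2+3", "5"), Task("t2", "10+7", "17")]

    def score(self, task: Task, answer: str) -> float:
        # Exact-match scoring: 1.0 if the answer equals the reference, else 0.0.
        return 1.0 if answer.strip() == task.expected else 0.0


class ArithmeticAgent:
    def act(self, prompt: str) -> str:
        # Parse "a+b" and return the sum as a string.
        left, right = prompt.split("+")
        return str(int(left) + int(right))


results = run(AdditionBenchmark(), ArithmeticAgent())
print(results)  # {'t1': 1.0, 't2': 1.0}
```

Because `run` depends only on the two interfaces, adding a new benchmark or a new agent does not require changing the other side. That decoupling is the point of a shared protocol.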

With a single line of setup, researchers and developers can access 60+ integrated benchmarks spanning NLP, web agents, code generation, medical AI, cybersecurity, and more. Each benchmark is containerized with Docker for reproducible evaluation.
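One common way to make a containerized evaluation reproducible is to reference the image by its content digest (`repository@sha256:...`) instead of by a mutable tag. The sketch below only builds a `docker run` argument list. `BenchmarkImage`, `docker_run_argv`, the repository name, and the `/results` mount point are hypothetical assumptions and do not describe how BenchFlow actually launches containers.

```python
from __future__ import annotations

import shlex
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BenchmarkImage:
    """Hypothetical description of one containerized benchmark."""
    repository: str                      # image repository, e.g. "example.org/bench/toy"
    digest: str                          # content digest of the form "sha256:<64 hex chars>"
    args: tuple[str, ...] = ()           # arguments passed to the image's entrypoint
    env: dict[str, str] = field(default_factory=dict)


def docker_run_argv(image: BenchmarkImage, results_dir: str) -> list[str]:
    """Build (but do not execute) a `docker run` command for one evaluation."""
    # --rm discards the container afterwards; the bind mount keeps results on the host.
    argv = ["docker", "run", "--rm", "-v", f"{results_dir}:/results"]
    for key, value in sorted(image.env.items()):
        argv += ["-e", f"{key}={value}"]
    # repo@sha256:... pins the exact image contents; a tag such as :latest can move.
    argv.append(f"{image.repository}@{image.digest}")
    argv.extend(image.args)
    return argv


image = BenchmarkImage(
    repository="example.org/bench/toy-addition",  # hypothetical repository
    digest="sha256:" + "0" * 64,                   # placeholder digest, not a real image
    args=("--split", "test"),
    env={"SEED": "0"},
)
print(shlex.join(docker_run_argv(image, "/tmp/results")))
```

Sorting the environment variables keeps the generated command identical across runs, which makes it easier to diff or log.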

The Hub serves as a central registry where benchmark authors publish their evaluations and agent developers discover relevant tests. The Runtime handles environment setup, dependency management, and result collection.
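The registry role of the Hub can be pictured as a publish/discover store keyed by name and version. The sketch below is an in-memory stand-in under stated assumptions: `Hub`, `BenchmarkEntry`, tag-based discovery, and the example entries are all hypothetical, not the Hub's real schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkEntry:
    """Hypothetical registry record for one published benchmark version."""
    name: str
    version: str
    tags: frozenset[str]
    image: str  # container image reference a runtime would launch


class Hub:
    """In-memory stand-in for a central benchmark registry (hypothetical)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], BenchmarkEntry] = {}

    def publish(self, entry: BenchmarkEntry) -> None:
        # A published (name, version) pair is immutable, so results stay comparable.
        key = (entry.name, entry.version)
        if key in self._entries:
            raise ValueError(f"{entry.name}=={entry.version} is already published")
        self._entries[key] = entry

    def discover(self, *tags: str) -> list[BenchmarkEntry]:
        """Return entries carrying every requested tag (all entries if none given)."""
        wanted = set(tags)
        matches = [e for e in self._entries.values() if wanted <= e.tags]
        # Plain string sort; a real registry would compare versions semantically.
        return sorted(matches, key=lambda e: (e.name, e.version))


hub = Hub()
hub.publish(BenchmarkEntry("toy-web-nav", "1.0", frozenset({"web-agents"}),
                           "example.org/bench/toy-web-nav:1.0"))
hub.publish(BenchmarkEntry("toy-med-qa", "1.0", frozenset({"medical", "nlp"}),
                           "example.org/bench/toy-med-qa:1.0"))
print([e.name for e in hub.discover("medical")])  # ['toy-med-qa']
```

Refusing to overwrite an existing `(name, version)` pair is one design choice that keeps scores reported against a given version meaningful over time.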

Gallery

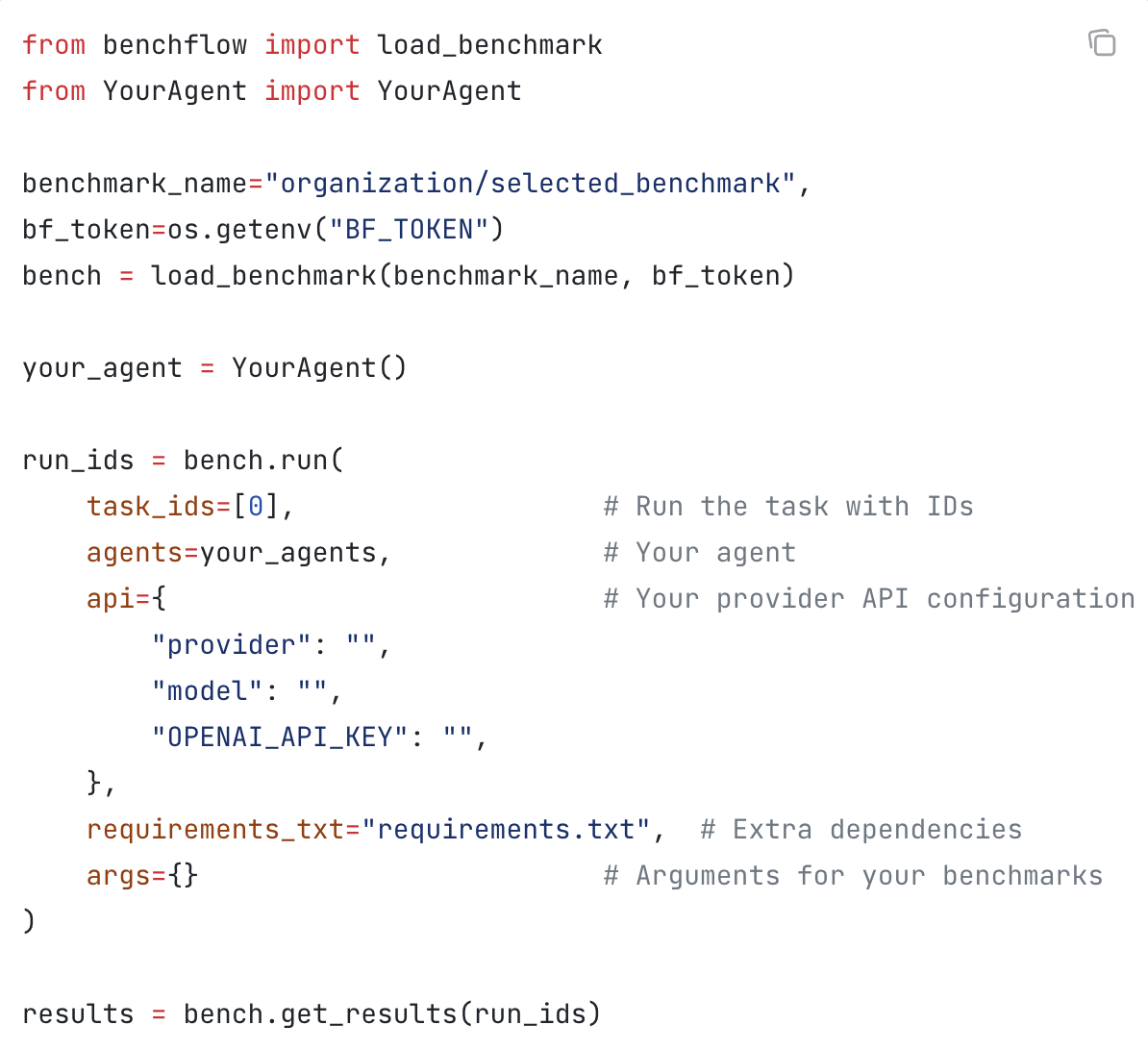

Run any benchmark with a few lines of Python
